Prestigious NSF award funds augmented reality research

Ann McNamara

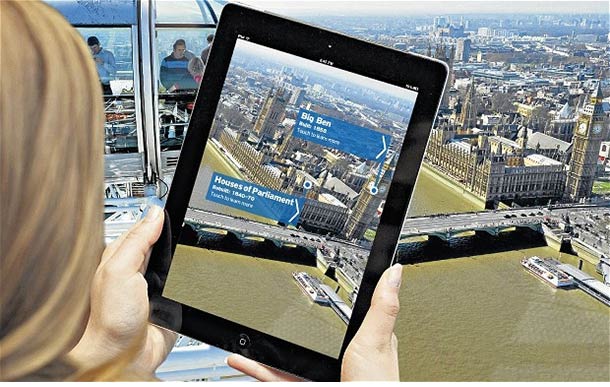

Imagine navigating an unfamiliar city street aided by a mobile device that follows your gaze, annotating the landscape and instantly identifying landmarks, or visiting a museum with a similar tool that identifies and interprets each exhibit as you peruse the collection.

This technology, which combines real and virtual worlds and is known as "mobile augmented reality," is the subject of a five-year, $529,862 study led by Ann McNamara, assistant professor of visualization at Texas A&M University and a 2013 recipient of the National Science Foundation's prestigious [Faculty Early Career Development Award](http://www.nsf.gov/funding/pgm_summ.jsp?pims_id=503214), which promotes junior faculty development and research of the highest quality.

McNamara's mobile augmented reality research will focus on developing a labeling system that unobtrusively displays identifying labels on a mobile device's screen over scenes captured by its camera.

“If you were a first-timer exploring Paris, you could hold up your tablet or phone and labels annotating the surrounding buildings would appear on the tablet’s screen, such as the name of a bank, a store, the Louvre, or a Metro station,” she said.

How the labels are presented, she added, is crucial to their effectiveness.

“A display with excessive or disorganized elements can lead to visual clutter, causing confusion, making it more difficult to understand a scene,” said McNamara. “The labels in a scene also need to be in the immediate vicinity of where the object is on the screen.”

The challenge, she said, is to create a system that displays the labels effectively and then to determine how quickly they should appear and disappear, how large they should be and how many should be on screen at once, so that they are neither distracting nor obtrusive.

McNamara will also collaborate with experts at the Museum of Fine Arts, Houston, on developing new methods for directing a viewer’s gaze to particular points of interest in paintings. This guided visualization, known as "scene navigation," relies on subtle gaze direction techniques. Stephen Caffey, assistant professor of architecture at Texas A&M, is also collaborating on this project.

“Imagine a student holding a tablet computer in front of an artifact or image and instantly, that object is virtually annotated with more information,” she said. “The overlay may include virtual text, images, web links or even video. I will be researching how to deliver augmented elements reflecting the interest of the user without cluttering the visual field or obscuring elements critical to the learning task.”

A member of the Texas A&M Department of Visualization faculty since 2008, McNamara focuses her research on advancing computer graphics and scientific visualization through novel approaches for optimizing an individual's experience when creating, viewing and interacting with virtual spaces. She holds a Ph.D. in computer graphics and a B.S. in computer science from the University of Bristol, United Kingdom, and an M.A. in education from the University of Dublin, Ireland.

McNamara is the third member of the Texas A&M College of Architecture faculty to receive an NSF award promoting junior faculty development and the integration of research and teaching. Sam Brody, professor of urban planning, received the NSF CAREER Award in 2003 for his work developing new, sustainable techniques for coastal flood mitigation and watershed management. Louis Tassinary, professor of visualization, received a five-year NSF Presidential Faculty Fellowship in 1993 for his research and teaching at the interface of health and design using psychophysiological methods.

Texas A&M visualization professor Ann McNamara is investigating how to improve the performance of mobile augmented reality applications like the one demonstrated above.